Recently we had a client with performance issues on a Joomla installation. The site wasn’t getting an incredible amount of traffic, but the traffic it was getting was overloading the server.

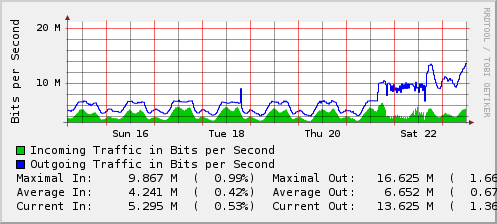

Since the machine hadn’t been having issues before, the first thing we did was contact the client and ask what had changed. We already knew which site and database were using most of the CPU time, but the bandwidth graph didn’t suggest that traffic was overrunning the server. Our client had rescued this customer from another hosting company because the site was unusable during prime time, so we had inherited a problem. During the move, the site was upgraded from Joomla 1.0 to 1.5, so we didn’t even have a decent baseline to revert to.

The stopgap solution was to move the .htaccess mod_rewrite rules into the Apache configuration, which helped somewhat. We identified a few sections of the code that were getting hit really hard and wrote a mod_rewrite rule to serve the images they requested directly from disk instead of letting Joomla serve those images through itself. This made a large impact and at least got the site responsive enough that we could leave it online and work through the admin to figure out what had gone wrong.

Some of the enabled modules contributed quite a bit to the performance headache. One chat module polled every second, for each logged-in user, to see if there were any pending messages, and each poll generated a 404. Since Joomla was loaded for every 404, this added quite a bit of extra processing. Another quick modification to the configuration eliminated dozens of bad requests. At this point the server was responsive, the client was happy, and we made notes in the trouble ticket system and our internal documentation for reference.
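One way to spot a module like this is to tally 404 responses per directory in the access log. The following is a minimal sketch, not the procedure used on this site: it assumes Apache's common or combined log format, and the helper name and the sample log lines (including the chat file names) are made up for illustration.

```python
import re
from collections import Counter

# Matches the request and status fields of a common/combined log line, e.g.
#   1.2.3.4 - - [date] "GET /path HTTP/1.1" 404 208
LOG_LINE = re.compile(r'"(?:GET|POST|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) ')


def count_404s_by_directory(lines):
    """Tally 404 responses by the directory part of the requested path."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match is None or match.group("status") != "404":
            continue
        path = match.group("path").split("?", 1)[0]  # ignore the query string
        directory = path.rsplit("/", 1)[0] + "/"
        counts[directory] += 1
    return counts


# Hypothetical sample lines; in practice, pass an open log file instead.
SAMPLE = [
    '10.0.0.5 - - [01/Jan/2010:12:00:01 -0500] "GET /modules/mod_oneononechat/chatfiles/alice.txt HTTP/1.1" 404 208',
    '10.0.0.6 - - [01/Jan/2010:12:00:01 -0500] "GET /modules/mod_oneononechat/chatfiles/bob.txt HTTP/1.1" 404 208',
    '10.0.0.7 - - [01/Jan/2010:12:00:02 -0500] "GET /index.php HTTP/1.1" 200 5120',
]

for directory, hits in count_404s_by_directory(SAMPLE).most_common(10):
    print(f"{hits:8d}  {directory}")
```

A directory that dominates the list is a good candidate for a server-level rule that answers the 404 without loading Joomla.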

Three days later the machine alerted and our load problem was back. After all of the changes, something was still having problems. Upon deeper inspection, we found that portions of the system dealing with the menus were being recreated on every request. There was no built-in caching for this, so the decision was to try Varnish. Varnish had worked in the past for WordPress sites that had gotten hit hard, so we figured that if we could cache the images, CSS and some of the static pages that don’t require authentication, we could get the server to be responsive again.

Apart from the basic configuration, our varnish.vcl file looked like this:

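    sub vcl_recv {
        # Serve www.domain.com and domain.com from the same cache entries.
        if (req.http.host ~ "^(www\.)?domain\.com$") {
            set req.http.host = "domain.com";
        }
        # Static files do not need cookies; stripping them lets Varnish cache the responses.
        if (req.url ~ "\.(png|gif|jpg|ico|jpeg|swf|css|js)$") {
            unset req.http.cookie;
        }
    }

    sub vcl_fetch {
        # Default TTL of one minute; static files are kept for an hour.
        set obj.ttl = 60s;
        if (req.url ~ "\.(png|gif|jpg|ico|jpeg|swf|css|js)$") {
            set obj.ttl = 3600s;
        }
    }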

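To get the Apache logs to report the visitor’s IP rather than the proxy’s address, you need to modify the VirtualHost config to log the forwarded IP, which Varnish passes along in the X-Forwarded-For header.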

The performance of the site after running Varnish in front of Apache was quite good. Apache was left handling only .php requests, and the server was responsive again. It ran like this for a week or more without any issues, with only a slight load spike here or there.

However, Joomla didn’t like the fact that every request’s REMOTE_ADDR was 127.0.0.1, and some addons stopped working. In particular, an application that allows the client to upload .pdf files into a library requires a valid IP address for some reason. Another module, which adds a sub-administration panel for a manager/editor, also requires an IP address other than 127.0.0.1.
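The underlying issue is that, behind a reverse proxy, the socket peer is always the proxy, and the visitor's address only survives in the X-Forwarded-For header. The sketch below shows the usual way an application can recover it. It is an illustration, not code from Joomla or any of the addons; the function name and the trusted-proxy set are assumptions.

```python
import ipaddress

# Assumption: the only proxy in front of the application is Varnish on localhost.
TRUSTED_PROXIES = {ipaddress.ip_address("127.0.0.1")}


def client_ip(remote_addr, x_forwarded_for=None):
    """Return the address the application should treat as the visitor's IP."""
    peer = ipaddress.ip_address(remote_addr)
    if peer not in TRUSTED_PROXIES or not x_forwarded_for:
        # Direct connection, or no header to consult: use the socket peer.
        return str(peer)
    # Walk the header right to left; the rightmost entry was added by the
    # proxy closest to us, so it is the most trustworthy one.
    for candidate in reversed([part.strip() for part in x_forwarded_for.split(",")]):
        try:
            address = ipaddress.ip_address(candidate)
        except ValueError:
            break  # malformed entry: stop trusting the header
        if address not in TRUSTED_PROXIES:
            return str(address)
    return str(peer)


print(client_ip("127.0.0.1", "203.0.113.7"))
```

The header is only consulted when the connection really comes from the proxy, since any visitor can send a forged X-Forwarded-For header on a direct connection.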

With some reservation, we decided to switch to Nginx + FastCGI, which removes the reverse proxy and should fix the IP address problems.

Our configuration for Nginx with Joomla:

    server {
        listen 66.55.44.33:80;
        server_name www.domain.com;
        rewrite ^(.*) http://domain.com$1 permanent;
    }

    server {
        listen 66.55.44.33:80;
        server_name domain.com;
        access_log /var/log/nginx/domain.com-access.log;

        location / {
            root /var/www/domain.com;
            index index.html index.htm index.php;
            if ( !-e $request_filename ) {
                rewrite (/|\.php|\.html|\.htm|\.feed|\.pdf|\.raw|/[^.]*)$ /index.php last;
                break;
            }
        }

        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root /var/www/nginx-default;
        }

        location ~ \.php$ {
            fastcgi_pass unix:/tmp/php-fastcgi.socket;
            fastcgi_index index.php;
            fastcgi_param SCRIPT_FILENAME /var/www/domain.com/$fastcgi_script_name;
            include fastcgi_params;
        }

        location = /modules/mod_oneononechat/chatfiles/ {
            if ( !-e $request_filename ) {
                return 404;
            }
        }
    }

With this configuration, Joomla was handed any URL that matched the rewrite pattern and pointed at a file that didn’t exist. This was to allow the Search Engine Friendly (SEF) links. The second 404 handler was for the oneononechat module, which looks for messages destined for the logged-in user.
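To see which URLs the rewrite pattern actually catches, it can be tried outside Nginx. The sketch below reuses the pattern from the config with Python's `re` module, which treats this particular expression the same way. It only models the `if`/`rewrite` pair inside `location /`, not Nginx's location selection (requests ending in .php are handled by the FastCGI location first), and the function name and sample URIs are made up.

```python
import re

# Same pattern as the rewrite rule in the Nginx configuration.
# Nginx matches it against the URI without the query string.
SEF_PATTERN = re.compile(r"(/|\.php|\.html|\.htm|\.feed|\.pdf|\.raw|/[^.]*)$")


def rewritten_to_index_php(uri, file_exists):
    """Mirror the `if (!-e ...) { rewrite ... }` decision for one request URI."""
    if file_exists:
        return False  # files that exist on disk are never rewritten
    return SEF_PATTERN.search(uri) is not None


# Hypothetical request URIs, all assumed to be missing on disk.
for uri in ["/", "/about-us", "/news/item.html", "/docs/manual.pdf", "/images/logo.png"]:
    print(uri, rewritten_to_index_php(uri, file_exists=False))
```

Extension-less SEF links and the listed extensions go to Joomla, while a missing image or stylesheet falls through to a plain Nginx 404 without loading Joomla at all.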

With Nginx, the site was again responsive. Load spikes still occurred from time to time, but the site was stable, had a lot less trouble dealing with the load, and seemed to recover pretty well.

However, a module called Rokmenu, which was included with the template design, appeared to have issues. Running PHP behind FastCGI sometimes gives different results than running it as mod_php, and it appears that Rokmenu relies on the path being passed and doesn’t normalize it properly. So, when the menu is generated, with SEF on or off, URLs look like /index.php/index.php/index.php/components/com_docman/themes/default/images/icons/16x16/pdf.png.
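To make the symptom concrete, the sketch below collapses a repeated leading /index.php into a single one. It is an illustration of the kind of normalization that is missing, not Rokmenu's code and not a fix we applied; the function name is made up, and whether the correct link should carry any /index.php prefix at all depends on how the module builds it.

```python
import re

# A leading run of "/index.php" segments, followed by "/" or the end of the path.
REPEATED_FRONT_CONTROLLER = re.compile(r"^(?:/index\.php)+(?=/|$)")


def collapse_index_php(path):
    """Collapse a repeated leading /index.php into a single occurrence."""
    return REPEATED_FRONT_CONTROLLER.sub("/index.php", path, count=1)


broken = ("/index.php/index.php/index.php/components/com_docman"
          "/themes/default/images/icons/16x16/pdf.png")
print(collapse_index_php(broken))
```

Paths that do not start with /index.php are returned unchanged.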

Obviously this creates a broken link and causes more 404s. We installed a fresh Joomla on Apache, imported the data from the copy running on Nginx, and Apache with mod_php appears to work properly. However, the performance is quite poor.

In order to troubleshoot, we made a list of every addon and ran through some debugging. With apachebench, we wrote up a quick command line that could be pasted in at the ssh prompt and decided upon some metrics. Within minutes, our first test revealed 90% of our performance issue. Two of the addons required compatibility mode because they were written for 1.0 and hadn’t been updated. Turning on compatibility mode on our freshly installed site resulted in 10x worse performance. As a test, we disabled the two modules that relied on compatibility mode, turned off compatibility mode, and the load dropped immensely. We had disabled SEF early on, thinking it might be the issue, but this test exposed the real performance problem almost immediately. Enabling other modules in subsequent tests showed only marginal performance changes. Compatibility mode was our culprit the entire time.
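The measurement itself is simple: send a fixed number of requests at a fixed concurrency and compare throughput before and after toggling one addon. The sketch below shows that idea with the Python standard library. It is a rough stand-in for apachebench, not the command we ran; the function names, defaults and the example URL are assumptions.

```python
import http.client
import time
import urllib.error
import urllib.request
from concurrent.futures import ThreadPoolExecutor


def fetch(url):
    """Fetch one URL and return (status, seconds); status is None on failure."""
    start = time.perf_counter()
    try:
        with urllib.request.urlopen(url, timeout=30) as response:
            response.read()
            status = response.status
    except urllib.error.HTTPError as error:
        status = error.code  # 4xx/5xx responses still count as completed
    except (OSError, http.client.HTTPException):
        status = None  # connection refused, timeout, truncated response, ...
    return status, time.perf_counter() - start


def benchmark(url, requests=100, concurrency=10):
    """Issue `requests` GETs with `concurrency` workers and summarize them."""
    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(fetch, [url] * requests))
    elapsed = time.perf_counter() - started
    timings = sorted(seconds for _, seconds in results)
    return {
        "requests": requests,
        "ok": sum(1 for status, _ in results if status == 200),
        "requests_per_second": requests / elapsed,
        "median_seconds": timings[len(timings) // 2],
    }


# Example (hypothetical URL): run once per configuration and compare the numbers.
# print(benchmark("http://domain.com/", requests=200, concurrency=10))
```

Changing only one setting between runs, such as compatibility mode, is what makes the numbers comparable.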

The client started a search for two modules to replace the two that required compatibility mode and disabled them temporarily while we moved the site back to Apache to fix the URL issue in Rokmenu. At this point, the site was responsive, though page loads with lots of images were not as quick as they had been with Nginx or Varnish. At a later point, images and static files will be served from Nginx or Varnish, but the site is fairly responsive and handles the load spikes reasonably well when Googlebot or another spider hits.

In the end, the site stayed on Apache because Varnish and Nginx each had minor issues with the deployment. Moving to Apache alternatives doesn’t always fix everything and may introduce side effects that you cannot work around.