Nginx to Apache?

A few months ago, a client came to us wanting to run Nginx/FastCGI rather than Apache because it was known to be faster. We have extensive experience performance tuning various webserver combinations, and the proposed workload would really have been better served by Apache. We inherited this problem from another hosting company (he moved because they couldn’t fix the performance issues), but he maintained that Nginx/FastCGI was the fastest option for PHP because of all of the benchmarks published on the internet.

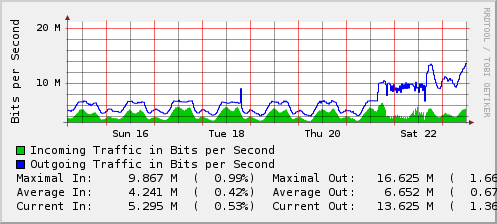

While converting from one server to another is usually painful, much of the pain can be avoided by running Apache on an alternate port, testing, and then swapping the configuration around. The graph below shows when we changed from Nginx to Apache:

We made the conversion from Nginx to Apache on Friday. Once we made the conversion, there were issues with the machine, which was running an older kernel. After reviewing the workload, we migrated from 2.6.31.1 with the Anticipatory scheduler to 2.6.34 with the Deadline scheduler. Three other machines had been running 2.6.33.1 with the CFQ scheduler and showed no issues at the 10mb/sec mark, but we decided to benchmark his workload using Deadline. We’ve run a number of high-end webservers with both Anticipatory and CFQ prior to 2.6.33, and for most of our workloads Anticipatory seemed to win. With 2.6.33, Anticipatory was removed, leaving NOOP, CFQ and Deadline. While we have a few MySQL servers running Deadline, this is probably the first heavy-use webserver that we’ve moved from CFQ/AS to Deadline.
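For reference, Linux lists the available elevators for each block device in sysfs, with the active one shown in square brackets. A minimal sketch of checking it, assuming a device named `sda` (a placeholder, not necessarily the device on this machine):

```python
from pathlib import Path


def parse_active_scheduler(contents: str) -> str:
    """Return the scheduler shown in [brackets] in a sysfs scheduler file."""
    for name in contents.split():
        if name.startswith("[") and name.endswith("]"):
            return name[1:-1]
    raise ValueError(f"no active scheduler marked in: {contents!r}")


def active_scheduler(device: str = "sda") -> str:
    """Read /sys/block/<device>/queue/scheduler; 'sda' is only a placeholder."""
    path = Path("/sys/block") / device / "queue" / "scheduler"
    return parse_active_scheduler(path.read_text())


if __name__ == "__main__":
    # Example line in the format the kernel uses, with deadline selected.
    print(parse_active_scheduler("noop [deadline] cfq"))
```

The same file can be written to as root to switch schedulers at runtime, which makes it possible to compare elevators under a live workload before committing to one.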

The dips in the daily graph occurred while a cron job was running. The two final dips were during the kernel installation.

All in all, the conversion went well. The machine never really appeared to be slow, but it is obvious that it is now handling more traffic. The load averages are roughly the same as they were before. CPU utilization is roughly the same but, more importantly, Disk I/O is about half what it was and System now hovers around 3-4%. During the hourly cron job, the machine no longer has the issues it had before.

Nginx isn’t always the best solution. In this case, 100% of the traffic is serving an 8k-21k php script to each visitor. Static content is served from another machine running Nginx.

While I do like Nginx, it is always best to use the right tool for the job. In this case, Apache happened to be the right tool.

June 7th, 2010 at 12:56 pm

I’m sorry, but I didn’t get your point: why did you move from Nginx to Apache, and why does Apache perform better?

Some guesses:

* Is it because the scheduler works better with Apache?

* Is it because the kernel works better with Apache?

* Does Apache serve 8k-21k PHP scripts better?

* If that client is using MySQL, maybe PHP/MySQL runs better on Apache?

I have read a number of your posts, and you’re a fairly detail-oriented tester.

I hope you elaborate more in a detailed post or comment. For example, “CPU utilization is roughly the same, but, more importantly, Disk I/O is about half what it was” is contrary to countless other benchmarks.

June 7th, 2010 at 3:43 pm

There were a number of issues with Nginx -> php-cgi. One required recompiling PHP to increase the listen backlog from its default of 128. While recompiling php-cgi eliminated most of the 502 Bad Gateway errors, we would still see them when the request load increased.
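To illustrate why the backlog matters, here is a rough sketch using Little’s law (requests in the system ≈ arrival rate × average time in the system). Requests that are not being handled by a php-cgi worker wait in the listen backlog; once that is full, new connections are refused and Nginx answers with a 502. The worker count and time per request below are hypothetical values chosen for illustration, not measurements from this machine.

```python
def backlog_overflow(rate_per_sec: float, seconds_in_system: float,
                     workers: int, backlog: int) -> float:
    """Estimate how many requests do not fit in workers + backlog.

    Little's law: requests in the system = arrival rate * average time
    each request spends in the system (waiting plus processing).
    Returns 0.0 when everything fits.
    """
    in_system = rate_per_sec * seconds_in_system
    capacity = workers + backlog
    return max(0.0, in_system - capacity)


# Hypothetical: 64 workers and 0.25 s per request at 1200 requests/second.
print(backlog_overflow(1200, 0.25, workers=64, backlog=128))
print(backlog_overflow(1200, 0.25, workers=64, backlog=2048))
```

Under these assumed numbers, the default backlog of 128 is too small while 2048 leaves headroom. A larger backlog only absorbs bursts, though: if the workers cannot keep up on average, the queue eventually fills regardless of its size.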

Diagnostics showed that the machine was CPU bound at times and was spending most of its time running the PHP scripts. In this case, Apache with mod_php eliminated the CGI bottleneck and was able to process more transactions. This exposed a problem with the Anticipatory elevator scheduler on that machine. Three other machines in that cluster were already running 2.6.33 with the CFQ scheduler and weren’t seeing the same issues. We moved the machine to the Deadline scheduler as part of a kernel upgrade, which eliminated the 10mb/sec ‘cap’ we had been seeing after the switch from Nginx to Apache. Before this, we had verified that the 10mb/sec cap was not an ethernet port problem; the machine was I/O bound due to the scheduler, not due to the disk fabric.

The only thing changed on Friday was replacing Nginx/PHP-FastCGI with Apache2-mpm-prefork/mod_php. Instantly, the machine started serving more traffic. While I thought this might have been related to a configuration issue in nginx/php, nothing stood out in the configuration options. Once Apache2/mod_php was running, we ran into the issue with the scheduler.

Since it is a production system, we’re unable to do a lot of testing or tweaking when things are working. I kept the config files and some logs so that we could duplicate things on a benchmark system, but I haven’t had much time to go back into things.

To answer your points in order:

* The scheduler didn’t appear to affect nginx/php-cgi versus apache/mod_php performance. I believe the issue was caused by the high concurrency of PHP scripts being run and the fact that the php-cgi gateway introduces overhead that mod_php avoids. 100% of the transactions were processed with php-cgi, and we had to increase the PHP backlog to 2048 just to keep the site functioning.

* The nginx -> apache swap happened without a reboot or kernel upgrade. Apache immediately started handling more traffic and started exhibiting other problems about 4 hours later due to the scheduler. When we did some benchmarking, we found that the Deadline scheduler worked better for their load, and we scheduled a kernel upgrade for each of the machines in their cluster.

* This software system has 21000 PHP scripts that range in size from 6k to 151k, averaging 40k, with 600-1200 requests per second per machine and 80% of the requests hitting scripts that are 120k or more. MySQL on each satellite averages 2300 transactions per second. As Linux will cache the frequently used scripts in memory in both cases, and the scripts run the same once the PHP interpreter gets the script, the only difference is the method used to invoke PHP: embedded or through the FastCGI gateway.

* The software stack on the machine was Nginx/FastCGI PHP/MySQL or Apache2-mpm-prefork/mod_php/MySQL.

After the conversion, CPU utilization dropped and Disk IO stayed roughly the same. I imagine most of the CPU issue was related to the Nginx/FastCGI gateway. While we have multiple other machines running Nginx/FastCGI, I think this particular combination just pushed things beyond the breaking point. As the installation has been working fine on Apache since the post and we are upgrading the machines in that cluster this week, I don’t imagine we’ll switch back until we hit the next bottleneck.

When fixing production problems, we don’t get a lot of time to do benchmarking. After the upgrade, we saw two things: CPU dropped about 15% and the bandwidth graphs jumped considerably. The client’s statistics jumped by a similar amount, suggesting that the machines were now processing more traffic than they had been.

June 8th, 2010 at 3:19 am

Thank you very much for the comments. As you said, it’s probably because of the extra latency that Nginx setups have.

I’m sure you have heard all of these, but I just want to remind you. php-fpm is a better implementation of FastCGI. I haven’t tried it with this kind of load, but it has pooling, for example, which can help. PHP will include php-fpm by default in 5.4.

Another project that can help is HipHop from the Facebook guys. However, it has one main drawback: you cannot use it with an existing web server, because it has its own. HipHop compiles scripts to C++ and is used in production at FB.

Also, an opcode cache can be used. eAccelerator is said to be stable with 5.2 and to work better than before under high load with 0.9.6. APC was used at FB before HipHop. But if the server is not CPU-bound, then you may not want to add an extra layer.

June 8th, 2010 at 10:38 am

Due to the nature of their scripts, eAccelerator, APC and XCache all had issues and were slower when running their traffic. XCache showed the most promise, but their gut feeling was that it was slower. I tried fpm briefly on another machine, but based on a few simple benchmarks, moving back to Apache seemed to be the quickest fix. Of course, once it is working, it drops off the radar quite quickly.

HipHop took 70 seconds to compile their smallest script and they had 18774 scripts which are updated hourly. Their 35k script ended up being 26mb, and those scripts are mirrored to their satellites on an hourly basis. Their current architecture just wouldn’t have worked with HipHop.
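A quick back-of-the-envelope calculation shows the scale of the problem. It assumes scripts are compiled one after another on a single core and uses the 70 seconds of the smallest script for all of them, so it is a lower bound rather than a measurement:

```python
SCRIPTS = 18774            # script count quoted above
SECONDS_PER_SCRIPT = 70    # compile time of the smallest script (lower bound)

# Assumption: serial compilation, every script as cheap as the smallest one.
total_seconds = SCRIPTS * SECONDS_PER_SCRIPT
total_hours = total_seconds / 3600
total_days = total_seconds / 86400

print(f"{total_seconds} s = {total_hours:.0f} h = {total_days:.1f} days")
```

Even with generous parallelism, that is far more than the one hour available between updates.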

Their application doesn’t do any caching other than the one-hour static PHP file written for each client, and that script does some absolutely horrendous calculations. To make it worse, it is very difficult to follow the code to figure out how and where to optimize, so it becomes cheaper to just throw CPU at it.