When to Cache, What to Cache, How to Cache

Tuesday, June 21st, 2011

This post is a version of the slideshow presentation I did at Hack and Tell in Fort Lauderdale, Florida at The Collide Factory on Saturday, April 2, 2011. These are 5 minute talks where each slide auto-advances after fifteen seconds, which limits the amount of detail that can be conveyed.

A brief introduction

What makes a page load quickly? There are many metrics we could look at, and quite a few things affect page loads. Even when a page is served quickly, its design can affect how it renders in the browser, which can make a site appear sluggish. Here, however, we’re going to focus on the mechanics of what it takes to serve a page quickly.

The Golden Rule – do as few calculations as possible to hand content to your surfer.

But my site is dynamic!

Do you really need to calculate the last ten posts entered on your blog every time someone visits the page? Surely you could cache that and purge the cache when a new post is entered. When someone adds a new comment, purge the cache and let it be recalculated once.
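As a minimal sketch of that idea, the snippet below caches the list of recent posts and purges it whenever a post is added. The `posts` list, the `cache` dict and the function names are all hypothetical stand-ins for a real database and cache, not part of any particular blog engine.

```python
# Hypothetical in-memory stand-ins for a blog's database and cache.
posts = []        # pretend this is the posts table
cache = {}        # pretend this is a key/value cache
query_count = 0   # counts how often the "expensive" work is done


def query_recent_posts(limit=10):
    """Simulate the expensive query for the newest posts."""
    global query_count
    query_count += 1
    return list(reversed(posts[-limit:]))


def recent_posts():
    """Return the newest posts, calculating them only on a cache miss."""
    if "recent_posts" not in cache:
        cache["recent_posts"] = query_recent_posts()
    return cache["recent_posts"]


def add_post(title):
    """Store a new post and purge the cached list so it is rebuilt once."""
    posts.append(title)
    cache.pop("recent_posts", None)


add_post("First post")
recent_posts()
recent_posts()            # served from the cache, no second query
add_post("Second post")   # purges the cached list
latest = recent_posts()   # recalculated once after the purge
```

However many visitors arrive between posts, the query runs once per purge rather than once per page view.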

But my site has user personalization!

Can that personalization be broken into its own section of the webpage? Or, is it created by a cacheable function within your application? Even if you don’t support fragment caching on the edge, you can emulate that by caching your expensive SQL queries or even portions of your page.

Even writing generated output to a static file and letting your webserver serve that file provides an enormous boost. This is what most of the caching plugins for WordPress do. However, they do page caching, not fragment caching, which means that the two most expensive queries that WordPress executes, Category list and Tag Cloud, are run again for every new page that is hit until that page is cached.
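The static-file approach can be sketched roughly as follows. This is not how any specific WordPress plugin works; `DOCROOT`, `render_page` and the other names are assumptions made for illustration, and a temporary directory stands in for the directory your webserver would serve.

```python
import tempfile
from pathlib import Path

# Hypothetical document root; a real webserver would be pointed at this directory.
DOCROOT = Path(tempfile.mkdtemp())


def render_page(slug):
    """Stand-in for the expensive, dynamic page build."""
    return f"<html><body><h1>{slug}</h1></body></html>"


def cached_path(slug):
    """Location of the static copy for a given page."""
    return DOCROOT / f"{slug}.html"


def get_page(slug):
    """Serve the static copy if present, otherwise render and save it."""
    path = cached_path(slug)
    if path.exists():
        return path.read_text(encoding="utf-8")
    html = render_page(slug)
    path.write_text(html, encoding="utf-8")
    return html


def purge_page(slug):
    """Remove the static copy so the next request rebuilds it."""
    cached_path(slug).unlink(missing_ok=True)
```

In practice the webserver would check for the file itself and only pass the request to the application when the file is missing.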

One of the problems with high performance sites is the never-ending quest for that Time to First Byte. Each load balancer or proxy in front adds some latency. To hand a response back as quickly as possible, a page needs to be pre-constructed before it is served, or you need to do a little trickery. That rules out dynamic processing on the page unless you’ve got plenty of spare computing horsepower.

With this, we’re left with a few options to have a dynamic site that has the performance of a statically generated site.

Amazon was one of the first to embrace the Page and Block method by using Mason, a mod_perl based framework. Each of the blocks on the page was generated ahead of time, and only the personalized blocks were generated ‘late’. This allowed the frontend to assemble these pieces, do minimal work to display the personal recommendations and present the page quickly.

Google took a different approach by having an immense amount of computing horsepower behind their results. Google’s method probably isn’t cost effective for most sites on the Internet.

Facebook developed BigPipe, which generates pages and then dynamically loads portions of the page into the DOM. This makes the page load quickly, but in stages: the viewer sees the rough page quickly while the rest of the page fills in.

The Presentation begins here

Primary Goals

Fast Pageloads – We want the page to load quickly and render quickly so that the websurfer doesn’t abandon the site.

Increased Scalability – Once we get more traffic, we want the site to be able to scale and provide websurfers with a consistent, fast experience while the site grows.

Metrics We Use

Time to First Byte – This is a measure of how quickly the site responds to an incoming request and starts sending the first byte of data. Some sites have to take time to analyze the request, build the page, etc. before sending any data. This lag results in the browser sitting there with a blank screen.

Time to Completion – We want the entire page to load quickly enough that the web surfer doesn’t abandon. While we can do some tricky things with chunked encoding to fool websurfers into thinking our page loads more quickly than it really does, for 95% of the sites, this is a decent metric.

Number of Requests – The total number of requests for a page is a good indicator of overall performance. Since most browsers will only request a handful of static assets at a time per hostname, we can use a CDN, embed images in CSS or use Image Sprites to reduce the number of requests.
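Embedding an image in CSS usually means writing it as a base64 `data:` URI, so the image arrives with the stylesheet instead of as a separate request. A small sketch, where the function name and the placeholder bytes are assumptions for illustration:

```python
import base64


def css_data_uri(image_bytes, mime_type="image/png"):
    """Encode raw image bytes as a data URI usable in a CSS url() value."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"url(data:{mime_type};base64,{encoded})"


# Placeholder bytes rather than a real image, just to show the shape of the rule.
rule = f".logo {{ background-image: {css_data_uri(b'abc')}; }}"
```

This trades a request for a larger stylesheet, so it tends to suit small images that appear on most pages.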

Why Cache?

Expecting Traffic

When we have an advertising campaign or holiday promotion going on, we don’t know what our expected traffic level might be, so, we need to prepare by having the caching in place.

Receiving Traffic

If we receive unexpected publicity, or our site is listed somewhere, we might cache to allow the existing system to survive a flood of traffic.

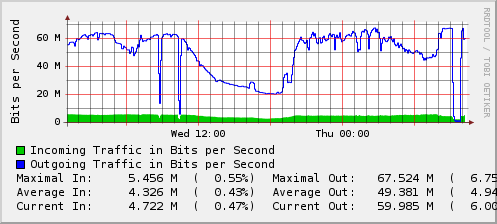

Fighting DDOS

When fighting a Distributed Denial of Service Attack, we might use caching to keep the backend servers from getting overloaded.

Expecting Traffic

There are several types of caching we can do when we expect to receive traffic.

* Page Cache – Varnish/Squid/Nginx provide page caching. A static copy of the rendered page is held and updated from time to time either by the content expiring or being purged from the cache.

* Query Cache – MySQL includes a query cache that can help on repetitive queries.

* Wrap Queries with functions and cache – We can take our queries and write our own caching using a key/value store, so we avoid hitting the database backend.

* Wrap functions with caching – In Python, we can use Beaker to wrap a decorator around a function which does the caching magic for us. Other languages have similar facilities.

Receiving Traffic

* Page Caching – When we’re receiving traffic, the easiest thing to do is to put a page cache in place to save the backend/database servers from getting overrun. We lose some of the dynamic aspects, but, the site remains online.

* Fragment Caching – With fragment caching, we can break the page into zones that have separate expiration times or can be purged separately. This can give us a little more control over how interactive and dynamic the site appears while it is receiving traffic.
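To make the idea of zones with separate expiration times concrete, here is a rough sketch. `FragmentCache`, `build_page` and the zone names are hypothetical, and the clock is injectable only so the behaviour is easy to exercise.

```python
import time


class FragmentCache:
    """Cache page zones separately, each with its own expiration time."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._fragments = {}  # name -> (expires_at, html)

    def get(self, name, ttl, render):
        """Return the cached fragment, re-rendering it once the TTL has passed."""
        entry = self._fragments.get(name)
        now = self._clock()
        if entry is None or entry[0] <= now:
            entry = (now + ttl, render())
            self._fragments[name] = entry
        return entry[1]

    def purge(self, name):
        """Drop one zone without touching the others."""
        self._fragments.pop(name, None)


def build_page(cache, render_sidebar, render_comments):
    # Hypothetical zones: the sidebar rarely changes, comments change often.
    sidebar = cache.get("sidebar", ttl=3600, render=render_sidebar)
    comments = cache.get("comments", ttl=30, render=render_comments)
    return f"<aside>{sidebar}</aside><section>{comments}</section>"
```

With this split, the comments zone can refresh every thirty seconds while the expensive sidebar is rebuilt at most once an hour, or sooner if it is purged.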

DDOS Handling

* Slow Client/Vampire Attacks – Certain DDOS attacks cause problems with some webserver software. Recent versions of Apache and most event/poll driven webservers have protection against this.

* Massive Traffic – With some infrastructures, we’re able to filter out the traffic ahead of time – before it hits the backend.

Caching Easy, Purging Hard

Caching is scalable. We can just add more caching servers to the pool and keep scaling to handle increased load. The problem we run into is keeping a site interactive and dynamic as content needs to be updated. At this point, purging/invalidating cached pages or regions requires communication with each cache.
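The toy model below shows why purging is the hard part. `CacheNode` and `CachePool` are hypothetical classes rather than the API of any real caching server, and the round-robin distribution is an assumption; the point is only that once several nodes may hold a copy, a purge has to reach all of them.

```python
class CacheNode:
    """Hypothetical stand-in for one caching server in the pool."""

    def __init__(self, name):
        self.name = name
        self.pages = {}

    def purge(self, url):
        """Drop the page and report whether this node was holding it."""
        if url in self.pages:
            del self.pages[url]
            return True
        return False


class CachePool:
    def __init__(self, nodes):
        self.nodes = list(nodes)
        self._next = 0

    def fetch(self, url, render):
        """Round-robin across nodes; each node fills its own copy on a miss."""
        node = self.nodes[self._next % len(self.nodes)]
        self._next += 1
        if url not in node.pages:
            node.pages[url] = render(url)
        return node.pages[url]

    def purge(self, url):
        """Invalidate a page everywhere; every node has to be contacted."""
        return [node.name for node in self.nodes if node.purge(url)]
```

Adding a node makes `fetch` cheaper on average, but it adds one more place that every `purge` has to reach.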

Page Caching

Some of the caching servers that work well are Varnish, Squid and Nginx. Each of these allows you to do page caching, specify expire times, and handle most requests without having to talk to the backend servers.

Fragment Caching

Edge Side Includes or a Page and Block construction allow you to cache pieces of the page, as illustrated in the diagram at the link below. With this, we can individually expire pieces of the page and allow our front end cache, Varnish, to reassemble the pieces to serve to the websurfer.

http://www.trygve-lie.com/blog/entry/esi_explained_simple
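The assembly step can be emulated in a few lines to show what the edge cache is doing. This sketch only handles the self-closing `<esi:include src="..."/>` tag and looks fragments up in a dict. The fragment paths and the `assemble` function are assumptions for illustration and are not how Varnish itself is implemented.

```python
import re

# Matches a self-closing ESI include tag and captures its src attribute.
ESI_INCLUDE = re.compile(r'<esi:include\s+src="([^"]+)"\s*/>')

# Hypothetical fragment store; on a real edge cache each fragment would be
# a separately cached response with its own expiration.
fragments = {
    "/fragments/header": "<header>My Blog</header>",
    "/fragments/recent": "<ul><li>Newest post</li></ul>",
}

template = (
    "<html><body>"
    '<esi:include src="/fragments/header"/>'
    '<esi:include src="/fragments/recent"/>'
    "</body></html>"
)


def assemble(page, fetch_fragment):
    """Replace each include tag with the fragment it points to."""
    return ESI_INCLUDE.sub(lambda match: fetch_fragment(match.group(1)), page)


html = assemble(template, fragments.__getitem__)
```

Because the template and each fragment are stored separately, expiring `/fragments/recent` leaves the cached header and page shell untouched.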

Cache Methods

* Hardware – Hard drives contain caches as do many controller cards.

* SQL Cache – adding memory to keep the indexes in memory or enabling the SQL query cache can help.

* Redis/Memcached – Using a key/value store can keep requests from hitting rotational media (disks)

* Beaker/Functional Caching – Either method can use a key/value store, preferably using RAM rather than disk, to prevent requests from having to hit the database backend.

* Edge/Frontend Caching – We can deploy a cache on the border to reduce the number of requests to the backend.

OS Buffering/Caching

* Hardware Caching on drive – Most hard drives today have caches – finding one with a large cache can help.

* Caching Controller – If you have a large ‘hot set’ of data that changes, using a caching controller can allow you to put a gigabyte or more of RAM in front of the disk to avoid having to hit it for requests. Make sure you get the battery backup card just in case your machine loses power – those disk writes are often reported as completed before they are physically written to the disk.

* Linux/FreeBSD/Solaris/Windows all use RAM for caching

MySQL Query Cache

The MySQL Query cache is simple yet effective. It isn’t smart and doesn’t cache based on query plan: it matches on the exact text of the query. If your code base executes queries where the conditions always appear in the same order, it can be quite a plus. If you are dynamically creating queries, assembling them so that the conditions stay in the same order will help.
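One way to keep dynamically built queries textually identical is to sort the conditions before assembling the statement. The helper below is a hypothetical sketch. It assumes the table and column names come from trusted code, and it uses the `%s` placeholder style common to Python MySQL drivers.

```python
def build_select(table, conditions):
    """Build a parameterized SELECT with conditions in a stable order.

    Sorting the column names means the same filters always produce the same
    query text, whatever order the caller supplied them in.
    """
    # Table and column names are assumed to come from trusted code, not users.
    columns = sorted(conditions)
    where = " AND ".join(f"{column} = %s" for column in columns)
    sql = f"SELECT * FROM {table} WHERE {where}"
    params = tuple(conditions[column] for column in columns)
    return sql, params


a = build_select("posts", {"status": "published", "author_id": 7})
b = build_select("posts", {"author_id": 7, "status": "published"})
```

Both calls produce the same statement and parameters, so two code paths that filter on the same columns no longer end up as two different cache entries.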

Redis/Memcached

* Key Value Store – you can store frequently requested data in memory.

* Nginx can read rendered pages right from Memcached.

Both methods use RAM rather than hitting slower disk media.

Beaker/Functional Caching

With Python, we can use the Beaker decorator to specify caching. This insulates us from having to write our own handler.
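To show what such a decorator does under the hood, here is a standard-library stand-in. It is not Beaker's API; `cached`, its `expire` argument and the `invalidate` hook are assumptions made for this sketch, and results are held in a per-function dict rather than a configurable backend.

```python
import functools
import time


def cached(expire, clock=time.monotonic):
    """Cache a function's results per argument tuple for `expire` seconds."""

    def decorator(func):
        store = {}  # args -> (expires_at, value)

        @functools.wraps(func)
        def wrapper(*args):
            now = clock()
            entry = store.get(args)
            if entry is None or entry[0] <= now:
                entry = (now + expire, func(*args))
                store[args] = entry
            return entry[1]

        # Expose a purge hook so callers can invalidate an entry early.
        wrapper.invalidate = lambda *args: store.pop(args, None)
        return wrapper

    return decorator


calls = []


@cached(expire=300)
def tag_cloud(blog_id):
    # Stand-in for an expensive query.
    calls.append(blog_id)
    return {"python": 12, "caching": 7}


tag_cloud(1)
tag_cloud(1)  # cached; the function body does not run again
```

The calling code does not change at all, which is the appeal of the decorator approach: caching is added or removed at the function definition.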

Edge/Front End Caching

* Define blocks that can be cached, portions of the templates.

* Page Caching

* JSON (CouchDB) – Even JSON responses can run behind Varnish.

* BigPipe – Cache the page, and allow JavaScript to assemble the page.

Content Delivery Network (CDN)

When possible, use a Content Delivery Network to store static assets off net. This adds a separate hostname and sometimes a separate domain name which allows most browsers to fetch more resources at the same time. Preferably you want to use a separate domain name that won’t have any cookies set – which cuts down on the size of the request object sent from the browser to the server with the static assets.

BigPipe

Facebook uses a technology called BigPipe which caches the page template and the JavaScript required to build the page. Once that has loaded, JavaScript fetches the data and builds the page. Some of the JSON data requested is also cached, leading to a very compact page being loaded and built while you’re viewing the page.

Google’s Answer

Google has spent many years building a tremendous distributed computer. When you request a site, their frontend servers use a deadline scheduler and request blocks from their advertising, personalization, search results and other page blocks. The page is then assembled and returned to the web surfer. If any block doesn’t complete quickly enough, it is left out from assembly – which motivates the advertising department to make sure their block renders quickly.
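The deadline idea can be sketched with a thread pool. This is only an illustration of the behaviour described above, not Google's implementation; `assemble_page`, the block names and the timings are all assumptions.

```python
import time
from concurrent.futures import ThreadPoolExecutor, wait


def assemble_page(blocks, deadline_seconds):
    """Render blocks in parallel and keep only those that finish in time.

    `blocks` maps a block name to a zero-argument render function.
    """
    executor = ThreadPoolExecutor(max_workers=max(1, len(blocks)))
    futures = {executor.submit(render): name for name, render in blocks.items()}
    done, _late = wait(futures, timeout=deadline_seconds)
    # Do not wait for stragglers; their output is simply left out.
    executor.shutdown(wait=False)
    finished = {futures[future]: future.result() for future in done}
    # Preserve the page's block order rather than completion order.
    return [finished[name] for name in blocks if name in finished]


def slow_ads():
    time.sleep(0.5)  # hypothetical block that misses the deadline
    return "<div>ads</div>"


page = assemble_page(
    {"results": lambda: "<div>results</div>", "ads": slow_ads},
    deadline_seconds=0.1,
)
```

Here the page is returned after roughly the deadline with only the results block, and the slow block's work is discarded.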

What else can we do?

* Reduce the number of calculations required to serve a page

* Reduce the number of disk operations

* Reduce network traffic

In general, do as few calculations as possible while handing the page to the surfer.